The cloud gives us many opportunities to quickly set up new infrastructure components. As an agency, it is important to us to be able to act independently of any single provider, which is why we rely on Terraform rather than, for example, CloudFormation, which only works with AWS.

We have set up a staging system for a customer that provides an AKS cluster in the Azure cloud, so that different feature branches can be tested in parallel and, in a later iteration, A/B testing becomes possible. We have described the required infrastructure with Terraform because it gives us several advantages:

- The environment can be shut down at night or on weekends and rebuilt through the pipeline to save costs.

- The same environment, for example a production environment, can be created in parallel without great effort.

- Problems caused by manual configuration and "clicking together" are eliminated. All necessary, security-relevant components are generated by Terraform, for example database passwords and certificates and of course the Kubernetes cluster.

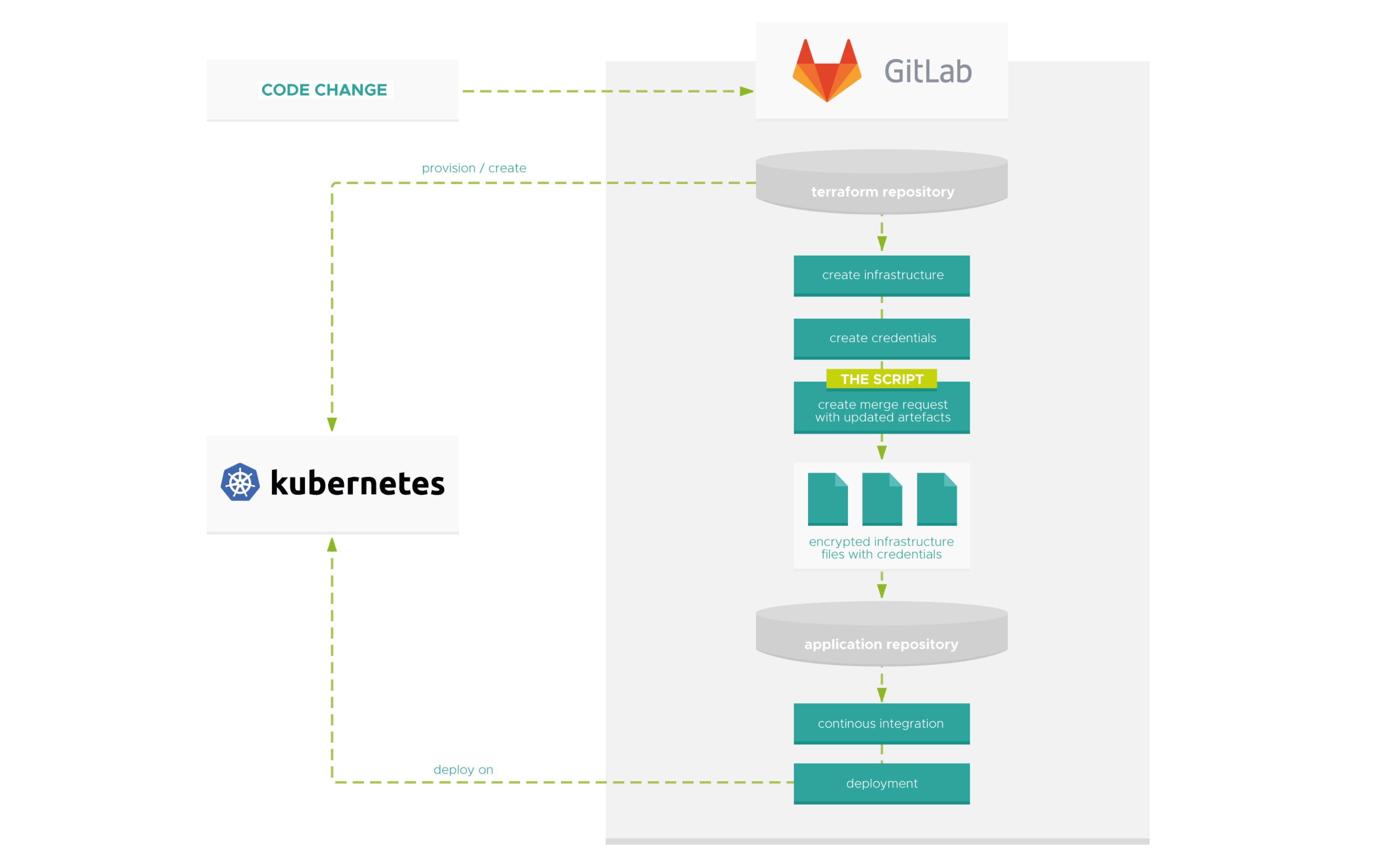

But how do all these variables find their way back into the pipelines of the services that are actually supposed to be deployed on the cluster, while staying protected from prying eyes? GitOps is the keyword. The following script runs in the cluster creation pipeline.

TL;DR

The script clones the specified repositories, replaces the values in the JSON files with the current values (line 76, 4) and opens a merge request in the corresponding app repositories via the git push options.
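The value-replacement step can be sketched as follows. This is a minimal illustration and not the script from the pipeline: the function name `replace_values`, the file layout, and the example keys are hypothetical, and cloning as well as encryption are left out.

```python
import json
from pathlib import Path


def replace_values(config_path: Path, new_values: dict) -> bool:
    """Overwrite top-level keys of a JSON file with freshly generated values.

    Hypothetical helper: returns True if the file changed, so the caller
    knows whether a commit and merge request are needed at all.
    """
    data = json.loads(config_path.read_text(encoding="utf-8"))
    updated = {**data, **new_values}
    if updated == data:
        return False  # nothing to commit, skip the merge request
    config_path.write_text(json.dumps(updated, indent=2) + "\n", encoding="utf-8")
    return True
```

Returning a flag keeps the pipeline from opening empty merge requests when the cluster was rebuilt but the values stayed the same.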

TC;DU

(too complicated, didn't understand)

Since this is security-sensitive data, we have encrypted the relevant files with Mozilla's sops.

Unlike git-crypt, sops lets us encrypt only the values instead of the complete file. This makes changes easier to see in a diff.

GitLab supports various git push options that not only create merge requests but can also accept them automatically: with merge_request.merge_when_pipeline_succeeds, the merge request is merged as soon as the pipeline has run successfully. The GitLab documentation lists all push options that can be useful for scripting.
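As a rough sketch of how such a push could be assembled in a script: the helper `build_push_command`, the remote name `origin`, and the default target branch `main` are assumptions made for illustration. The push options themselves (`merge_request.create`, `merge_request.target`, `merge_request.merge_when_pipeline_succeeds`) are GitLab's.

```python
from typing import List


def build_push_command(branch: str, target: str = "main",
                       auto_merge: bool = True) -> List[str]:
    """Build a `git push` argv that asks GitLab to open a merge request.

    Hypothetical helper; remote name and default target are assumptions.
    """
    options = ["merge_request.create", f"merge_request.target={target}"]
    if auto_merge:
        # Merge automatically once the merge request pipeline is green.
        options.append("merge_request.merge_when_pipeline_succeeds")

    command = ["git", "push"]
    for option in options:
        command += ["-o", option]  # one -o flag per push option
    # Push the current commit to the given branch on the remote.
    command += ["origin", f"HEAD:{branch}"]
    return command
```

The resulting list can be passed to `subprocess.run(command, check=True, cwd=repo_dir)` inside the cloned app repository, where `repo_dir` is whatever directory the pipeline cloned into.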

Ideally, these pipelines should run a smoke test to find out whether the new configuration works, so that everything else can then be deployed on the new cluster. Here is an example with Sonobuoy.

Disclaimer

This is a low-overhead approach that may not be appropriate for every use case. An alternative could be to use HashiCorp Vault. Vault takes care of managing sensitive data and can even dynamically inject secrets into a Kubernetes pod. Here is a blog post about it. For more cross-repository tasks with GitLab, we recommend this blog post.

Sops can also be used directly with Terraform.